Software developers spend roughly equal time on meetings (12%) and coding (11%), with the remaining time distributed across debugging, architecture, reviews, and operational tasks. This fragmentation correlates with decreased productivity and satisfaction when it creates a gap between actual and ideal time allocation (Kumar et al., 2025).

Traditional workflows treat implementation as the bottleneck, forcing a costly trade-off: explore multiple solutions (expensive) or ship the first working approach (fast but suboptimal). Large-scale projects face 50+ key decisions where “it’s simply infeasible to thoroughly research every single decision” before implementation (Backlund, 2024). The real constraint isn’t typing speed. It’s decision quality under fragmented time.

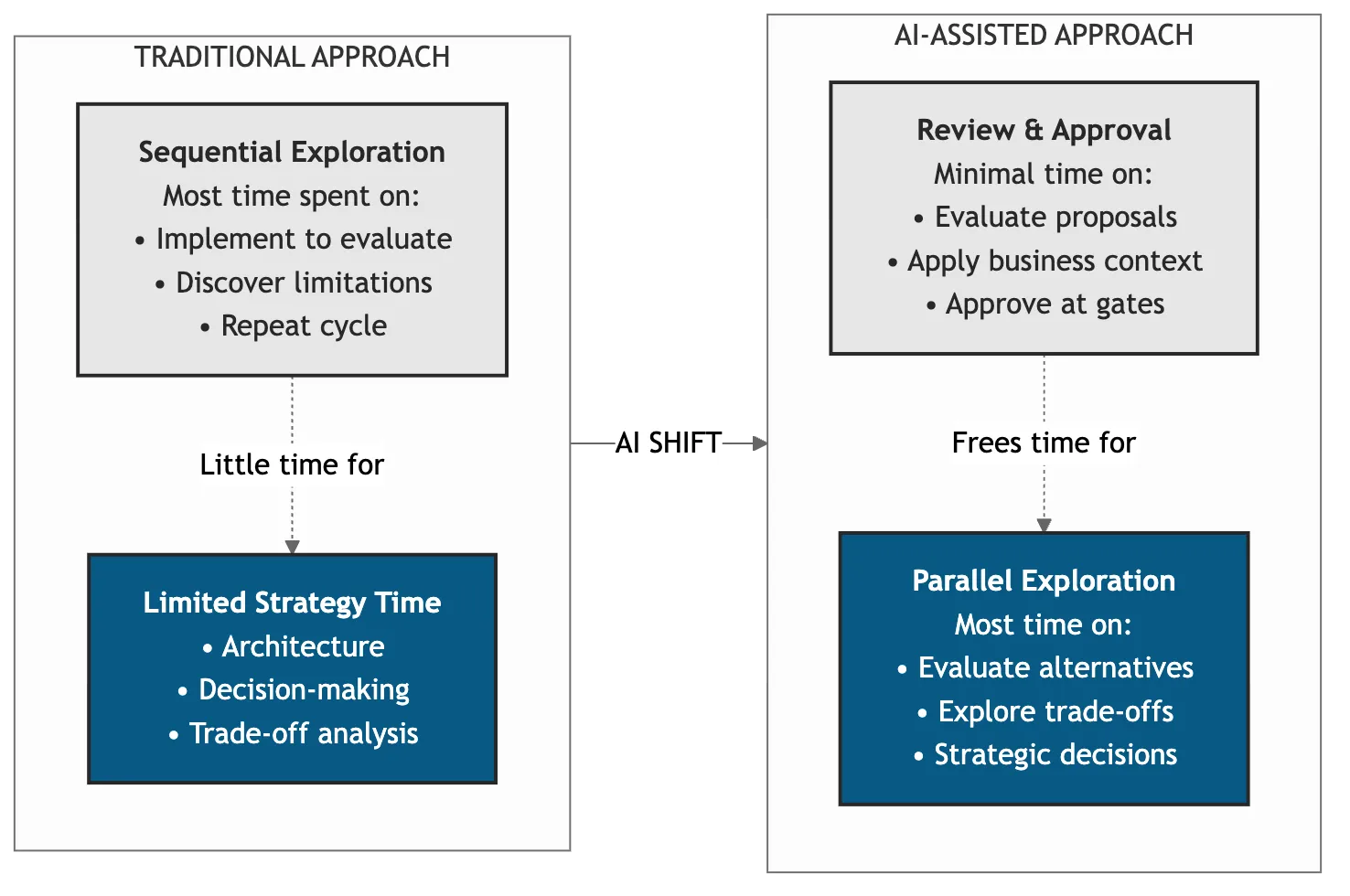

AI-assisted development breaks this trade-off. Implementation becomes a review task. Expert judgment focuses on strategic decisions.


How Does AI-Assisted Development Shift Time Allocation?

AI assistants shift the focus from implementation to judgment. You spend minimal time reviewing code and most time applying strategic thinking.

In a controlled experiment, developers using GitHub Copilot completed a coding task 55% faster than those working without it (GitHub, 2024). The gain isn't typing speed. It's preserving cognitive capacity for critical thinking.

Critical thinking scales. Implementation doesn’t. You can evaluate 3 architectural approaches in the time it takes to implement one.

Example: Goose (open-source AI assistant) handles codebase analysis, implementation, testing, and documentation. You provide business context, architectural judgment, and approve decisions at critical gates.

Figure 1: Traditional approach focuses on implementation overhead, AI-assisted approach maximizes strategic thinking


Real Example: CI Modernization

Context: math-mcp-learning-server had no CI workflow. Legacy mypy was slow and unused.

The judgment call: Build CI from scratch or adapt patterns from a similar project?

AI identifies reusable patterns: Ruff (linter/formatter) + uv (package manager) + pytest-cov. I review the risk assessment, verify the tooling choices match project needs, and confirm zero regressions.
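The check sequence above can be sketched as a small Python runner that mirrors the CI steps locally. This is a minimal sketch, not the workflow from PR #52: the exact commands and flags in `CI_STEPS` are assumptions based only on the tools named (uv, Ruff, pytest-cov), and `run_ci` is a hypothetical helper.

```python
import subprocess
import sys
from collections.abc import Sequence

# Assumed commands for the tooling named above; the real workflow may differ.
CI_STEPS: tuple[tuple[str, ...], ...] = (
    ("uv", "sync"),                                   # sync the environment with the lockfile
    ("uv", "run", "ruff", "check", "."),              # lint
    ("uv", "run", "ruff", "format", "--check", "."),  # verify formatting without rewriting
    ("uv", "run", "pytest", "--cov"),                 # tests with coverage (pytest-cov)
)


def run_ci(steps: Sequence[Sequence[str]] = CI_STEPS) -> int:
    """Run each step in order; stop at the first failure and return its exit code."""
    for cmd in steps:
        print("$", " ".join(cmd), flush=True)
        result = subprocess.run(list(cmd), check=False)
        if result.returncode != 0:
            return result.returncode
    return 0


if __name__ == "__main__":
    sys.exit(run_ci())
```

Failing fast keeps the feedback loop short: a lint error surfaces in seconds, before the slower test step runs.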

Results:

- ~20 minutes vs 3-4 hours from scratch

- CI runtime: 5 seconds (Ruff is 10-100x faster than traditional Python linters and formatters)

- 67 tests passing, 83% coverage

Source: PR #52 - Add modern CI workflow

Real Example: Matrix Operations Feature

Context: math-mcp-learning-server needed 5 matrix operation tools with NumPy integration.

The judgment call: Implement incrementally (one tool per PR) or batch with shared validation patterns?

AI identifies common infrastructure: dimension validation, ToolError handling, DoS prevention via size limits. I review API design, error handling conventions, and security limits.
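The shared-validation idea can be sketched as follows. This is a minimal pure-Python sketch rather than the project's NumPy code: `ToolError` is a local stand-in for the MCP framework's error type, and `MAX_DIM`, `validate_matrix`, `matrix_multiply`, and `transpose` are hypothetical names. Every tool calls one validator, so dimension checks, error messages, and the size limit stay consistent across tools.

```python
# Local stand-in for the MCP framework's tool error type (assumed, not imported).
class ToolError(Exception):
    pass


MAX_DIM = 100  # hypothetical size limit bounding CPU/memory per request

Matrix = list[list[float]]


def validate_matrix(m: Matrix, name: str = "matrix") -> tuple[int, int]:
    """Shared checks used by every matrix tool; returns (rows, cols)."""
    if not isinstance(m, list) or not m:
        raise ToolError(f"{name} must be a non-empty list of rows")
    # Reject oversized input before iterating over it.
    if len(m) > MAX_DIM:
        raise ToolError(f"{name} has {len(m)} rows; limit is {MAX_DIM}")
    if not all(isinstance(row, list) and row for row in m):
        raise ToolError(f"{name} rows must be non-empty lists")
    rows, cols = len(m), len(m[0])
    if cols > MAX_DIM:
        raise ToolError(f"{name} has {cols} columns; limit is {MAX_DIM}")
    if any(len(row) != cols for row in m):
        raise ToolError(f"{name} rows must all have the same length")
    return rows, cols


def matrix_multiply(a: Matrix, b: Matrix) -> Matrix:
    ra, ca = validate_matrix(a, "a")
    rb, cb = validate_matrix(b, "b")
    if ca != rb:
        raise ToolError(f"cannot multiply {ra}x{ca} by {rb}x{cb}")
    return [[sum(a[i][k] * b[k][j] for k in range(ca)) for j in range(cb)]
            for i in range(ra)]


def transpose(m: Matrix) -> Matrix:
    validate_matrix(m)
    return [list(col) for col in zip(*m)]
```

Adding a sixth tool then means writing only its core computation; validation and limits come for free.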

Results:

- 2 minutes from PR creation to merge

- 5 tools, 21 tests, 395 lines added

- Patterns reusable for future tools

Source: PR #109 - Implement 5 matrix operation tools

What Business Value Does AI-Assisted Development Deliver?

Decision Quality

The fundamental shift is from “implement first, evaluate later” to “evaluate first, implement once”. With AI handling implementation, you can explore 2-3 alternatives per decision instead of committing to the first working approach. Research confirms “the best solution must first become a candidate before being selected… More candidates increase the likelihood the ideal solution is among them.”
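A toy model makes the quoted claim concrete. It is an illustration, not a result from the cited research: assume each candidate approach independently has probability $p$ of being an ideal fit. The chance that at least one of $k$ candidates is ideal is then

$$
P_k = 1 - (1 - p)^k, \qquad p = 0.3:\quad P_1 = 0.30,\quad P_3 = 1 - 0.7^3 = 0.657
$$

Under these assumptions, moving from one candidate to three more than doubles the odds that the best option is on the table.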

Time to reach validated options drops from 1.5-6 hours to between 15 minutes and 1 hour. Architectural reversals decrease because more analysis happens up front.

Senior Engineer Leverage

| Metric | Traditional | AI-Assisted | Business Impact |
|---|---|---|---|
| Time allocation | Implementation-heavy | Judgment-focused | Maximize expert leverage |
| Scope per engineer | 1-2 specialties | Full stack | Eliminate specialist bottlenecks |
| Exploration cost | High (must implement) | Low (preview and abandon) | Ship best solution, not first |

Table 1: Comparison of senior engineer time allocation and scope between traditional and AI-assisted approaches

This transformation aligns with GitHub's research on AI coding assistants: 60-75% of surveyed developers reported feeling more fulfilled in their jobs, and 87% said the assistant helped them preserve mental effort during repetitive tasks (GitHub, 2024).

Measured Time Savings

| Task | AI-Assisted | Traditional | Savings |
|---|---|---|---|
| CI modernization | ~20 minutes | 3-4 hours | ~90% |
| Matrix operations (5 tools) | 2 minutes | 1-2 hours estimated | ~95% |
| DNS migration | 2 hours | 4-6 hours | ~60% |

Table 2: Measured time savings across infrastructure and DevOps tasks

Broader industry research points the same way, with smaller average effects: a 26% overall productivity increase across 4,867 developers, and 30-50% time savings on repetitive tasks in enterprise settings.

At 10 infrastructure tasks per month, saving just 30 minutes per task (far below the per-task savings in Table 2) recovers ~60 hours per year per engineer. That is 1.5 forty-hour weeks of productive time returned to strategic work.
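The estimate reduces to simple arithmetic. The ~60-hour figure corresponds to roughly 30 minutes saved per task at 10 tasks per month; the 40-hour work week is an assumption, and both helper names below are hypothetical.

```python
def annual_hours_recovered(tasks_per_month: int, minutes_saved_per_task: float) -> float:
    """Hours saved per year, assuming a constant task rate and per-task saving."""
    return tasks_per_month * 12 * minutes_saved_per_task / 60


def as_work_weeks(hours: float, hours_per_week: float = 40.0) -> float:
    """Convert hours into work weeks (40-hour week assumed by default)."""
    return hours / hours_per_week


hours = annual_hours_recovered(10, 30)
print(hours, as_work_weeks(hours))  # 60.0 1.5
```

Plugging in the lower bound of the CI modernization saving (3 hours minus 20 minutes, i.e. 160 minutes) gives `annual_hours_recovered(10, 160) == 320.0`, which shows how conservative the 30-minute assumption is.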

Strategic Impact

| Outcome | Shift | Business Value |
|---|---|---|
| Engineer capability | 1-2 specialties → Full-stack | Eliminate specialist bottlenecks |
| Production risk | Manual review → AI + gates | Governance without slowdown |
| Knowledge retention | Tribal → Codified recipes | Team continuity |
| Onboarding time | Weeks → Hours | Faster scaling |

Table 3: Strategic outcomes from traditional to AI-assisted workflows

How Do Recipes Codify Engineering Judgment?

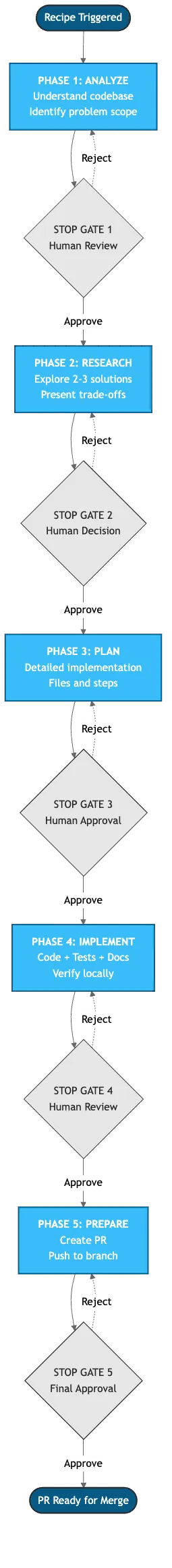

Goose uses “recipes”: YAML workflow definitions that codify your judgment and process. The key innovation is mandatory STOP points where AI proposes and you approve before proceeding.

5-phase workflow:

- ANALYZE - Understand codebase and problem

- RESEARCH - Explore 2-3 solution approaches with trade-offs

- PLAN - Detailed implementation plan

- IMPLEMENT - Code, tests, documentation

- PREPARE - Create PR, verify branch, push

Figure 2: Recipe workflow enforces governance through 5 mandatory approval gates - AI proposes, human judges


This matters for four reasons. First, repeatable process replaces ad-hoc prompting. Second, audit trails capture every decision in PR history. Third, human judgment gates ensure governance without blind automation. Fourth, codified expertise becomes an onboarding tool.
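The gate semantics can be modeled in a few lines. This is a hypothetical Python sketch of the control flow only, not how Goose executes recipes: `propose` stands in for the AI, `approve` for the human reviewer, and every decision is appended to an audit log. A rejection at any gate stops the workflow before later phases run.

```python
from dataclasses import dataclass, field
from typing import Callable

# The five phases listed above, in order; each ends at a human approval gate.
PHASES = ("ANALYZE", "RESEARCH", "PLAN", "IMPLEMENT", "PREPARE")


@dataclass
class GatedWorkflow:
    """Hypothetical model of a recipe: the AI proposes, a human approves each phase."""

    propose: Callable[[str], str]        # AI side: returns a proposal for a phase
    approve: Callable[[str, str], bool]  # human side: accept or reject that proposal
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def run(self) -> bool:
        """Return True if every gate was approved; stop at the first rejection."""
        for phase in PHASES:
            proposal = self.propose(phase)
            approved = self.approve(phase, proposal)
            self.audit_log.append((phase, proposal, approved))  # record every decision
            if not approved:
                return False  # STOP: later phases never run
        return True


# Usage: a reviewer who rejects the plan halts the workflow before IMPLEMENT.
wf = GatedWorkflow(
    propose=lambda phase: f"{phase}: proposed approach",
    approve=lambda phase, proposal: phase != "PLAN",
)
print(wf.run())                              # False
print([entry[0] for entry in wf.audit_log])  # ['ANALYZE', 'RESEARCH', 'PLAN']
```

Keeping the log append ahead of the early return is what makes rejections, not just approvals, part of the audit trail.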

Example GATE pattern:

`~/.config/goose/recipes/goose-coder.yaml`

```markdown
## Phase 1: RESEARCH

Understand scope and constraints:

- Read issue/PR description, linked discussions
- Identify affected files with `rg` and `analyze`
- Note CI requirements, test patterns, coding standards

### GATE: Research Summary

**STOP - Present to user:**

- Problem statement (1-2 sentences)
- Affected files and scope
- Constraints discovered (CI, tests, dependencies)
- 2-3 possible approaches with trade-offs

**ASK:** "Which approach do you prefer?"
```

Code Snippet 1: GATE pattern from production recipe. AI presents constrained options, human selects direction before any code is written.

Branch hygiene is enforced by global Git hooks: a pre-push hook blocks pushes to protected branches, and a commit-msg hook requires conventional commits with a DCO (Developer Certificate of Origin) sign-off. The recipe ensures work starts on a feature branch. Full recipe: goose-coder.yaml on GitHub Gist.

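`~/.githooks/commit-msg`

```bash
#!/usr/bin/env bash
# Git passes the path to the commit message file as the first argument
COMMIT_MSG_FILE="$1"
# Only the subject (first line) must follow the conventional format
COMMIT_MSG=$(head -n 1 "$COMMIT_MSG_FILE")

# Conventional commit format (type list abbreviated; as written, "..." matches any 3 chars)
CONVENTIONAL_REGEX='^(feat|fix|docs|...)(\([a-z0-9_-]+\))?(!)?: .{1,100}$'

if ! printf '%s\n' "$COMMIT_MSG" | grep -qE "$CONVENTIONAL_REGEX"; then
  echo "BLOCKED: Commit message must follow conventional format" >&2
  exit 1
fi

# DCO required
if ! grep -q "^Signed-off-by:" "$COMMIT_MSG_FILE"; then
  echo "BLOCKED: Missing DCO (Signed-off-by)" >&2
  exit 1
fi
```

Code Snippet 2: Global commit-msg hook enforces conventional commits and DCO. Githooks provide hard blocks; recipes provide guidance.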
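A pre-push hook can follow the same pattern, and since Git runs any executable placed at the hook path, it can be written in Python. Git feeds the hook one line per ref being pushed on standard input, in the form `<local ref> <local sha> <remote ref> <remote sha>`. This is a minimal sketch, assuming `main` and `master` are the protected branches; `PROTECTED` and `blocked_refs` are hypothetical names, and the author's actual hook may differ.

```python
#!/usr/bin/env python3
import sys
from collections.abc import Iterable

# Assumed protected branches; adjust per repository.
PROTECTED = {"refs/heads/main", "refs/heads/master"}


def blocked_refs(lines: Iterable[str]) -> list[str]:
    """Return remote refs in the push that target a protected branch."""
    blocked = []
    for line in lines:
        parts = line.split()
        if len(parts) != 4:
            continue  # ignore malformed or empty lines
        _local_ref, _local_sha, remote_ref, _remote_sha = parts
        if remote_ref in PROTECTED:
            blocked.append(remote_ref)
    return blocked


def main() -> int:
    blocked = blocked_refs(sys.stdin)
    if blocked:
        print(f"BLOCKED: direct push to protected branch: {', '.join(blocked)}",
              file=sys.stderr)
        return 1  # non-zero exit aborts the push
    return 0


if __name__ == "__main__":
    sys.exit(main())
```

Because the check keys on the remote ref, it also blocks `git push origin feature:main`, not just pushes made while `main` is checked out.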

When Does This Approach Work?

| Task Type | AI-Assisted Fit | Evidence |
|---|---|---|
| CI/DevOps automation | High | 20 min vs 3-4 hrs (PR #52) |
| Feature implementation | High | 2 min for 5 tools (PR #109) |
| Boilerplate generation | High | Common pattern in both PRs |
| Greenfield architecture | Medium | More judgment gates needed |

Table 4: Task type fit for AI-assisted development based on production experience

Low-fit tasks include security-sensitive code (AI may miss edge cases), regex/parsing logic (subtle bugs compound), and legacy systems without documentation (AI lacks context).

Critical success factor: You must have the expertise to evaluate proposals. AI amplifies judgment; it does not replace it.

The Transformation

Traditional software economics fragments expert time across operational overhead. When developers spend only 11% of their time coding, the bottleneck isn't implementation speed. It's context-switching between debugging, reviews, meetings, and operational tasks.

AI-assisted development consolidates this fragmentation. AI handles the operational work (debugging patterns, boilerplate, documentation), freeing human attention for architectural decisions and strategic judgment. The scarce resource shifts from “time to implement” to “ability to decide.”

When implementation becomes cheap (minutes instead of hours), exploration becomes affordable. You can evaluate three approaches, prototype two, and ship the best one, all in less time than the traditional single-path approach.

For technical leaders, this amplifies your most expensive resource: expert judgment. When your bottleneck is making the right decision, not finding time to code, AI becomes a strategic multiplier.

References

- ACM, “Measuring GitHub Copilot’s Impact on Productivity” (2024) — https://cacm.acm.org/research/measuring-github-copilots-impact-on-productivity/

- Backlund, Emil, “A Cost-Based Decision Framework for Software Engineers” (2024) — https://www.emilbacklund.com/p/a-cost-based-decision-framework-for

- GitHub, “Research: Quantifying GitHub Copilot’s impact on developer productivity and happiness” (2024) — https://github.blog/news-insights/research/research-quantifying-github-copilots-impact-on-developer-productivity-and-happiness/

- GitHub Resources, “Measuring the Impact of GitHub Copilot” (2024) — https://resources.github.com/learn/pathways/copilot/essentials/measuring-the-impact-of-github-copilot/

- IEEE Computer Society, “Software Engineering Economics and Declining Budgets” (2024) — https://www.computer.org/resources/software-engineering-economics

- Kumar et al., “Time Warp: The Gap Between Developers’ Ideal vs Actual Workweeks in an AI-Driven Era” (2025) — https://arxiv.org/abs/2502.15287

- Pandey et al., “Evaluating the Efficiency and Challenges of GitHub Copilot in Real-World Projects” (2024) — https://arxiv.org/abs/2406.17910