Code reviews are a bottleneck. Engineering teams lose significant velocity waiting for feedback—time that compounds when security vulnerabilities escalate. Fixing a defect in production costs 30-100× more than fixing it during design (Boehm & Basili, 2001). The economics are unavoidable: shift left or absorb exponential costs downstream.

AI in CI/CD isn’t about replacing humans. It’s about giving your pipeline the intelligence to catch problems before humans see them. Do this in seconds, not hours.

Table of contents

Contents

- What Is the Real Cost of Manual Code Review?

- How Do You Integrate AI into CI/CD Without Prompt Injection Risk?

- Tier 2 and Tier 3: Speed vs. Security Trade-offs

- Evolution From Uncontrolled to Managed AI Analysis

- What Outcomes Can You Expect from AI-Augmented CI/CD?

- Your Next Step: Start With Tier 1

- References

What Is the Real Cost of Manual Code Review?

The Review Bottleneck

Your development team runs at the speed of your slowest code review. Code review bottlenecks are well-documented across engineering teams. Feedback loops stretch from hours to days while developers context-switch or wait on reviewers. Research from Forsgren et al. (2024) shows context-switching during code review significantly reduces developer productivity and satisfaction.

GitHub’s 2024 Octoverse showed median time from PR open to first review is 4 hours in large organizations, 22 hours in enterprises. AI summaries collapse that to under 3 minutes.

Traditional CI/CD handles this poorly. Your pipeline runs automated linters and security scanners, generates reports, then stops. A human reads the output, interprets it, decides if it matters, and either approves or comments. That handoff is where velocity dies. Eight-hour review windows become production delays. Critical insights get buried in noise. Studies confirm developers fear review delays will slow delivery, even though they recognize reviews’ long-term quality benefits (Santos et al., 2024). And the smarter your engineers, the more expensive this wait becomes.

The Security Cost Multiplier

The cost multiplier gets worse with security. IBM and Software Engineering Institute research confirms production fixes can be orders of magnitude more expensive than early detection—the exact multiplier depends on when the defect surfaces. The expenses compound: rework costs, deployment delays, and potential security incidents all increase exponentially downstream.

Shift-left automation means detecting issues before a PR merges, before a human even reviews the code. AI can analyze linter output, security scan results, and code patterns in seconds. Speed improves dramatically: 2-7 second analysis vs. 4-8 hour reviews. Developers get instant feedback, iterate faster, ship with confidence.

The Prompt Injection Risk

But raw AI analysis of code diffs introduces a critical vulnerability: prompt injection. If your CI/CD pipeline feeds user-submitted code directly to an AI model, an attacker can craft a PR with embedded instructions that manipulate the AI’s behavior. The AI might approve malicious code, disable security checks, or expose sensitive information. This isn’t theoretical—it’s a live attack surface in every AI-augmented system.

The solution is defensive architecture: build your CI/CD so AI never analyzes untrusted input directly. Instead, AI analyzes tool output: structured, deterministic results from linters, security scanners, and static analysis. Your pipeline becomes: linter runs first, generates JSON, AI summarizes the findings, human approves. Attack surface shrinks to zero.

This requires thinking in tiers. Not all systems have the same threat model. A private repo with a trusted five-person team can tolerate different risk than open-source accepting external contributors. We built three patterns that match different threat models and deliver the speed gains you need.

How Do You Integrate AI into CI/CD Without Prompt Injection Risk?

Tier 1 is immune to prompt injection. Your linter runs first, produces JSON output, and the AI analyzes only that structured data. The AI never sees the raw code, never processes user input, never runs in the context of potentially malicious diffs.

name: AI Analysis - Maximum Security

on: [pull_request]

permissions:

contents: read

jobs:

analyze:

runs-on: ubuntu-latest

steps:

- name: Checkout

uses: actions/checkout@v6

- name: Lint Code

run: pipx run ruff check --output-format=json . > lint.json || exit 0

- name: Setup Goose

uses: clouatre-labs/setup-goose-action@v1

- name: AI Analysis

env:

GOOGLE_API_KEY: ${{ secrets.GOOGLE_API_KEY }}

run: |

echo "Summarize these linting issues:" > prompt.txt

cat lint.json >> prompt.txt

# Only structured tool output appended. Never raw source code.

goose run --instructions prompt.txt --no-session --quiet > analysis.md

- name: Upload Analysis

uses: actions/upload-artifact@v5

with:

name: ai-analysis

path: analysis.mdtier1-maximum-security.ymlDefensive boundary: AI analyzes only JSON output from the linter, never raw code. See the full Tier 1 example

The workflow is simple. Linter runs, produces JSON. AI analyzes JSON. Results upload as an artifact. No posting to the PR, no automated approvals, no AI-driven decisions that affect the merge. A human reviews the AI’s summary before deciding what to do. Speed improves (2-5 minute feedback vs. 8-hour wait), security stays intact (zero injection risk), and you retain human judgment on what matters.

Use Tier 1 by default. It’s the safest pattern. Public repos should mandate it. Open-source projects must use it. External contributors change the threat model. Don’t accept that risk.

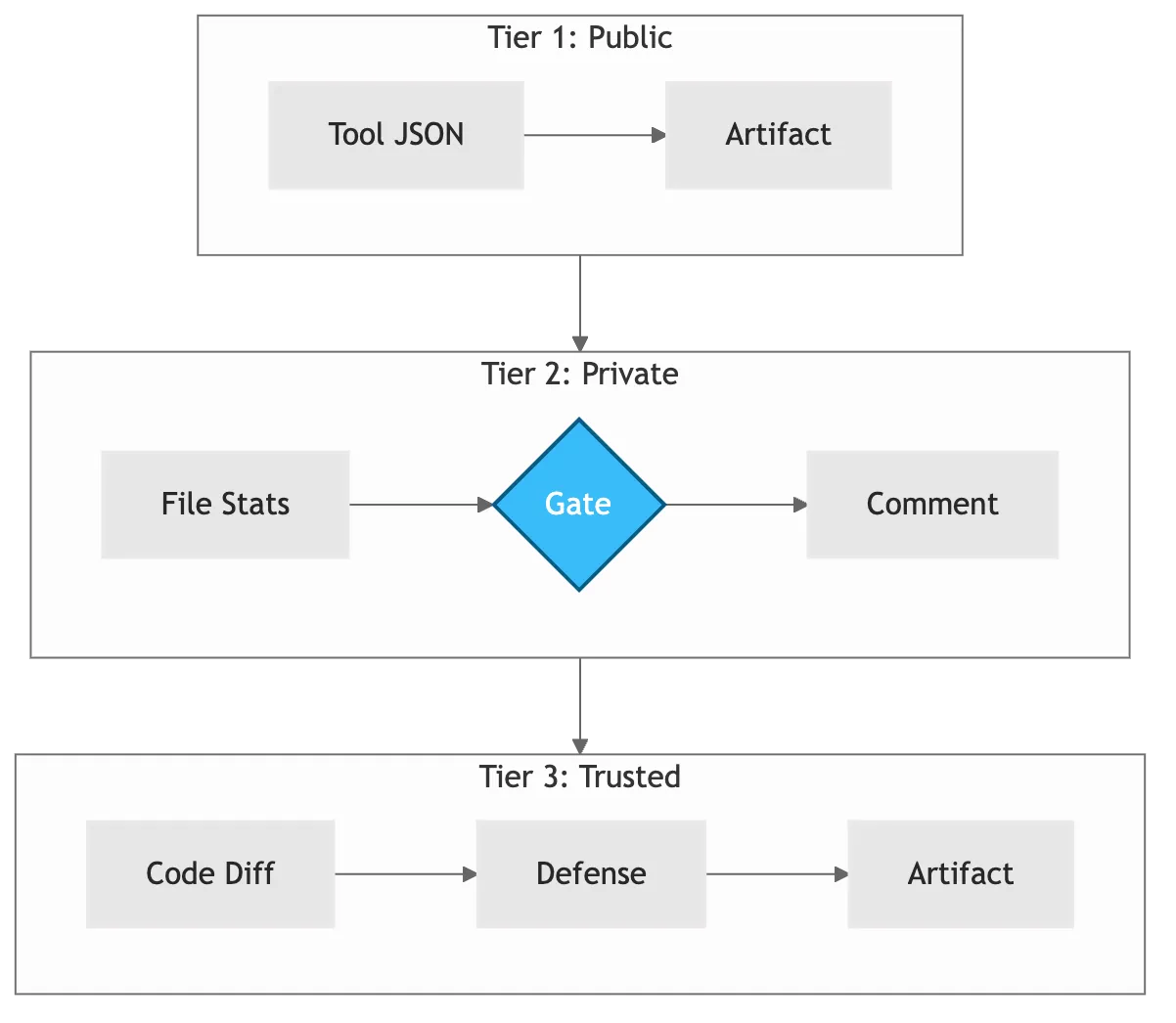

Figure 1: Tier 1 defensive pattern. AI analyzes tool output, never sees raw code. Immune to prompt injection.

Tier 2 and Tier 3: Speed vs. Security Trade-offs

Not every team needs Tier 1. Private repositories with trusted contributors can tolerate more AI context. That’s Tier 2: the AI sees file statistics and change scope, but not the full diff. It requires manual approval before posting results to the PR. The injection risk is low. The AI has less input to manipulate, but it’s not zero. Use Tier 2 when your team is internal and you trust everyone to follow security practices.

name: AI Analysis - Balanced Security

on: [pull_request]

permissions:

contents: read

jobs:

analyze:

runs-on: ubuntu-latest

steps:

- name: Checkout

uses: actions/checkout@v6

- name: Get Changed Files

id: files

run: |

git diff --name-only origin/main...HEAD > files.txt

wc -l files.txt >> summary.txt

- name: Setup Goose

uses: clouatre-labs/setup-goose-action@v1

- name: AI Analysis

env:

GOOGLE_API_KEY: ${{ secrets.GOOGLE_API_KEY }}

run: |

echo "Review these file changes:" > prompt.txt

cat files.txt summary.txt >> prompt.txt

# File names and stats. Not the actual code content.

goose run --instructions prompt.txt --no-session --quiet > analysis.md

- name: Upload Analysis

uses: actions/upload-artifact@v5

with:

name: ai-analysis

path: analysis.mdtier2-balanced-security.ymlBalanced approach: AI sees file scope and metadata, but not code diffs. See the full Tier 2 example

Tier 3 gives the AI full visibility into code diffs. It can detect subtle patterns, suggest contextual improvements, and provide the deepest analysis. It’s also the most vulnerable to prompt injection. Use Tier 3 only when your repo is 100% private and your team size is small enough that you trust everyone completely. The gain in AI insight comes at the cost of accepting injection risk.

name: AI Analysis - Advanced Patterns

on: [pull_request]

permissions:

contents: read

jobs:

analyze:

runs-on: ubuntu-latest

steps:

- name: Checkout

uses: actions/checkout@v6

- name: Get Full Diff

run: git diff origin/main...HEAD > changes.diff

- name: Setup Goose

uses: clouatre-labs/setup-goose-action@v1

- name: AI Analysis

env:

GOOGLE_API_KEY: ${{ secrets.GOOGLE_API_KEY }}

run: |

echo "Deeply analyze these code changes:" > prompt.txt

cat changes.diff >> prompt.txt

# Complete code diffs for maximum context and detail

goose run --instructions prompt.txt --no-session --quiet > analysis.md

- name: Upload Analysis

uses: actions/upload-artifact@v5

with:

name: ai-analysis

path: analysis.mdtier3-advanced-patterns.ymlMaximum context: AI sees full diffs for subtle patterns. See the full Tier 3 example

The comparison is straightforward. Each tier trades visibility for security. Tier 1 sacrifices some context to eliminate injection risk. Tier 2 accepts low risk for moderate context. Tier 3 prioritizes insight over security and should be rare.

Choose your tier based on three factors:

- Who has access to your repo (external contributors vs internal team)

- How much context AI needs (tool output vs full diffs)

- Your risk tolerance (injection risk vs deeper analysis)

Figure 2: Three security tiers. Choose based on your threat model and team trust level.

| Tier | Input | Injection Risk | Approval Gate | Typical Feedback Time | Recommended For |

|---|---|---|---|---|---|

| 1 | Tool output (JSON) | None | Human reviews artifact | 2-5 min | Public repos, OSS, any external contributors |

| 2 | File stats + metadata | Low | Human pre-approval | 1-3 min | Private repos, internal teams |

| 3 | Full code diff | Controlled | Optional | <60 sec | Tiny trusted teams only |

Table 1: Tier comparison: speed, risk, and recommended use.

The decision framework is simple: start at Tier 1. Measure your deployment velocity, security posture, and developer satisfaction. Only move to Tier 2 or 3 if your team consensus is that the additional AI context outweighs the injection risk. Most teams never need to leave Tier 1.

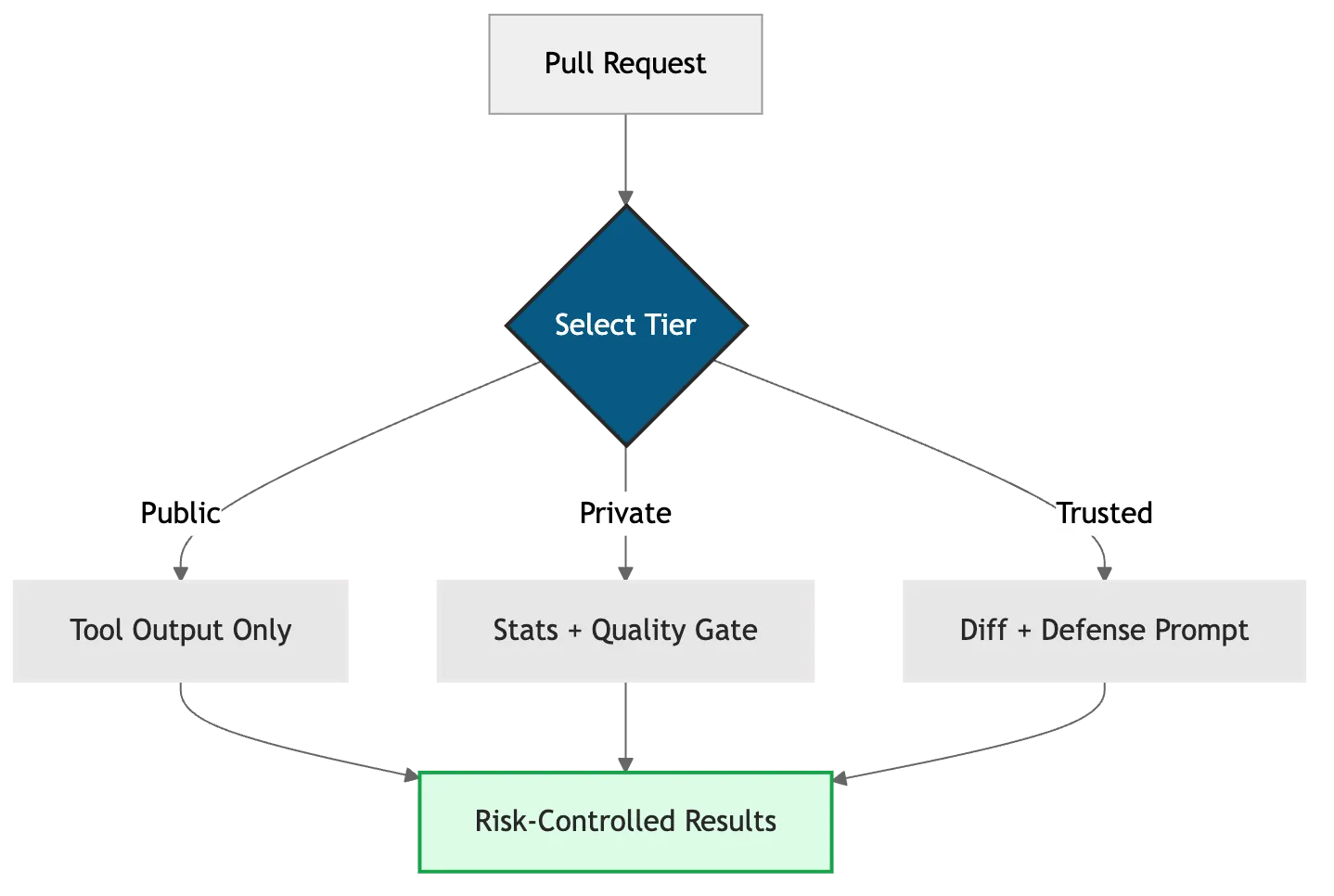

Evolution From Uncontrolled to Managed AI Analysis

The naive approach is to feed AI your code diff directly and let it comment on the PR. That’s fast, looks smart, and creates an injection surface. The improved approach layers security tiers on top, giving you a decision framework that matches your threat model.

Figure 3: Evolution from uncontrolled AI analysis to risk-managed tiers.

The shift is architectural, not just operational. You’re moving from “AI sees everything and decides” to “AI sees what’s safe and humans decide what matters.” That distinction is where security and speed both win.

What Outcomes Can You Expect from AI-Augmented CI/CD?

Typical first-review latency drops from 4–22 hours (Octoverse 2024) to under 5 minutes, a 50–250× reduction. Developers iterate faster because they see feedback immediately. CI/CD pipelines don’t stall waiting for human review availability. Your smartest engineers spend less time waiting and more time building.

Quality improves because AI catches patterns humans miss at 2 AM or during context-switching. Linting issues get flagged automatically. Security tool outputs get analyzed for severity and context. Fewer critical issues reach production because they’re caught earlier in the workflow.

For broader observability patterns in AI agent workflows, including legacy system integration, see AI agents in legacy systems.

Developer satisfaction increases when velocity and quality both improve. Engineers don’t feel blocked by the review process. They get comprehensive feedback without waiting. They trust the pipeline because it combines deterministic tools with AI insight and human judgment. That combination (automated speed plus human accountability) is what modern CI/CD needs.

The business outcome is measurable. Deployment frequency increases, mean time to resolution decreases, and security incidents reduce. The engineering team ships faster without sacrificing safety. That’s the value proposition of AI-augmented CI/CD done right.

Your Next Step: Start With Tier 1

Get the Tier 1 workflow (30-second setup): → https://github.com/clouatre-labs/setup-goose-action/blob/main/examples/tier1-maximum-security.yml

Measure your baseline: current review time, deployment frequency, security incident rate. Run for two weeks, then measure again.

Full action docs and examples:

- https://github.com/clouatre-labs/setup-goose-action (multiple AI models: Gemini, Claude, Llama)

- https://github.com/clouatre-labs/setup-kiro-action (AWS-native SIGV4 authentication, no API keys in secrets)

Your CI/CD can be faster and more secure. Not instead of humans. Alongside them. That’s where the business value lives.

References

- Boehm & Basili, “Software Defect Reduction Top 10 List” (2001) — https://www.cs.umd.edu/projects/SoftEng/ESEG/papers/82.78.pdf

- Forsgren et al., “DevEx in Action: A study of its tangible impacts” (2024) — https://dl.acm.org/doi/10.1145/3639443

- GitHub Octoverse 2024 — https://github.blog/news-insights/octoverse/octoverse-2024/

- OWASP LLM Top 10 (2025 edition) – Prompt Injection #1 — https://owasp.org/www-project-top-10-for-large-language-model-applications/

- Santos et al., “Modern code review in practice: A developer-centric study” (2024) — https://www.sciencedirect.com/science/article/pii/S0164121224003327