You run a mid-market company with SAP, mainframe, or AS400 systems that work but won’t win awards. The board wants AI. Your team wants modernization budgets. You’re stuck in the middle.

Every AI agent case study assumes clean APIs, cloud-native apps, and real-time data. Your world is batch jobs, COBOL, and integration layers built in 2003. The conventional answer is “modernize first, then AI.” That’s a 2-5 year, $5M-$50M bet before you prove a single dollar of AI value.

This post shows where AI agents make economic sense on top of legacy systems, how to measure ROI without enterprise-wide transformation, and which integrations work when your data lives in places LLMs have never heard of. You’ll walk away with a decision framework for identifying agent use cases that pay back in quarters, not years.

Table of contents

Contents

- Why Legacy Systems Became the #1 AI Adoption Obstacle

- The Reverse Modernization Strategy: Layer AI First, Upgrade Later

- How Do AI Agents Actually Integrate with Legacy Systems?

- Why Observability Infrastructure Is Non-Negotiable

- What ROI Can Mid-Market Companies Actually Expect?

- Why Do 40% of AI Agent Projects Still Fail?

- How to Start: A Practical Implementation Framework

- Why Should Your Board Fund This Now?

- References

Why Legacy Systems Became the #1 AI Adoption Obstacle

Legacy systems top the list of AI adoption obstacles, but the conventional fix is worse than the problem. Traditional modernization projects require multi-year timelines and eight-figure budgets before proving a single dollar of AI value. No wonder 40% of agentic AI projects will be canceled by 2027 (Gartner, 2025) due to escalating costs and unclear business value.

The real bottleneck isn’t legacy systems. It’s the false choice between “modernize everything” and “do nothing.” You need integration patterns that work with what you have.

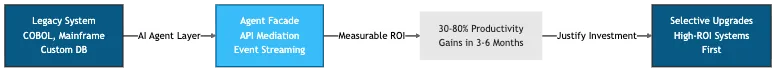

The Reverse Modernization Strategy: Layer AI First, Upgrade Later

Flip the conventional wisdom. Instead of modernizing first, layer AI agents over your existing systems. Capture ROI in months. Then use that ROI to fund selective modernization. This is reverse modernization: prove the value before you invest in infrastructure.

Atera reduced sales response times by 60% (World Economic Forum, 2026) by integrating AI agents with their existing CRM and ticketing systems. They didn’t rebuild their infrastructure. They built a layer on top. Armis accelerated RFP response capacity by 73% (AutoRFP, 2026) without adding headcount. Both companies proved the business case before investing in modernization.

Why This Works for Mid-Market Companies

Reverse modernization solves three problems at once. First, it avoids business disruption. Your legacy systems keep running while agents handle new work. Second, it delivers 30-80% productivity gains in 3-6 months. Third, it lets you start small. One workflow. One team. One agent. Then expand based on what works.

The risk profile is completely different. Modernization is a binary bet: succeed or lose years of investment. Agent layering is incremental. You prove value, fund upgrades, and repeat.

When Reverse Modernization Doesn’t Apply

Three scenarios require modernization first. End-of-life systems without vendor support expose you to compliance violations and security breaches (Cyber Snowden, 2026). Agent integration can’t fix missing security patches. Regulatory mandates that explicitly require infrastructure upgrades (e.g., PCI-DSS 4.0, GDPR data residency) make layering non-compliant. Systems scheduled for decommissioning within 12 months don’t justify integration investment. In these cases, accelerate modernization or sunset the system entirely.

For everything else, reverse modernization applies.

Figure 1: Reverse modernization flow (agents first, infrastructure later)

How Do AI Agents Actually Integrate with Legacy Systems?

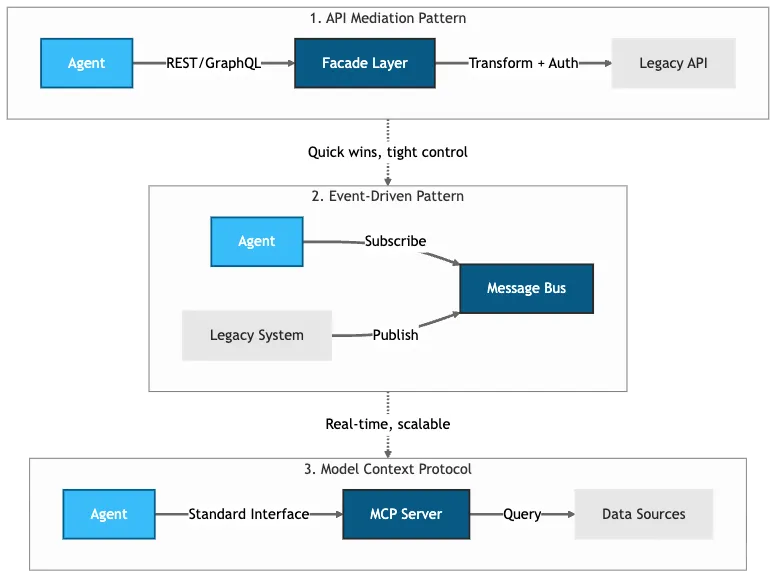

But integration is where most projects fail. Agents need access to data and business logic buried in legacy systems. You have three options. Each has tradeoffs.

API Mediation Layer

Build a facade that abstracts legacy complexity. Agents interact with clean, modern interfaces while the mediation layer handles authentication, data translation (EBCDIC to UTF-8, fixed-width to JSON), and error handling. When the legacy system changes, you update the facade, not the agents. You also get a single point for logging, monitoring, and compliance audits.

from fastapi import FastAPI

from pydantic import BaseModel

class Customer(BaseModel): # Modern JSON schema

id: str

name: str

balance: float

@app.get("/customers/{customer_id}", response_model=Customer)

async def get_customer(customer_id: str) -> Customer:

raw = legacy_client.call("CUSTINQ", customer_id.ljust(10))

return Customer(

id=raw[0:10].strip(),

name=raw[10:40].encode("cp037").decode("utf-8"), # EBCDIC to UTF-8

balance=int(raw[40:52]) / 100, # Packed decimal

)mediation/legacy_facade.pyCode Snippet 1: FastAPI facade translates COBOL fixed-width records to validated JSON.

Event-Driven Architecture

Legacy systems publish state changes through Dapr, which supports Kafka, Azure Event Hub, and others. Agents subscribe and react in near real-time. This pattern scales better than API mediation: the system pushes updates when they matter instead of agents polling constantly. Dapr’s abstraction avoids vendor lock-in.

The tradeoff: you need to instrument the legacy system to publish events, which isn’t trivial if the system is old and undocumented.

from dapr.ext.grpc import App

from cloudevents.sdk.event import v1

import json

app = App()

@app.subscribe(pubsub_name="legacy-events", topic="orders")

def handle_order(event: v1.Event) -> None:

data = json.loads(event.Data()) # CloudEvents envelope

# Agent processes order without polling legacy system

agent.process_order(data["order_id"], data["customer_id"])

app.run(6002)events/order_subscriber.pyCode Snippet 2: Dapr subscriber receives legacy system events via CloudEvents (swap Kafka/RabbitMQ/Azure without code changes).

Model Context Protocol (MCP)

Anthropic’s open standard for agent-to-data connections. You write one MCP server for your legacy system, and any agent can use it. No custom integration code for each agent. This matters when coordinating multiple agents, a problem I’ve written about in orchestrating multiple AI agents with subagent architecture.

from fastmcp import FastMCP # FastMCP 3.0

mcp = FastMCP("Legacy ERP")

@mcp.tool

def query_customer(customer_id: str) -> str:

"""Query customer from mainframe. Any MCP-compatible agent can call this."""

result = mainframe_client.execute(f"SELECT * FROM CUSTMAST WHERE ID='{customer_id}'")

return json.dumps(result)

if __name__ == "__main__":

mcp.run(transport="http", port=8000) # Remote agents connect via HTTPmcp/legacy_server.pyCode Snippet 3: FastMCP 3.0 server exposes legacy data to any MCP-compatible agent (one server, many agents).

Which Pattern Should You Choose?

| Pattern | Best When | Timeline |

|---|---|---|

| API Mediation | Stable APIs, 1-2 agents, tight control needed | 4-8 weeks |

| Event-Driven | 1,000+ transactions/hour, sub-second response | 8-12 weeks |

| MCP | 3+ agents, standardization priority | 6-12 weeks |

Table 1: Integration pattern selection guide

Figure 2: Three integration patterns for legacy systems

Why Observability Infrastructure Is Non-Negotiable

Whatever integration pattern you choose, log everything. Every integration call. Every agent decision. Every error. This isn’t optional.

Why Log Everything?

- Compliance: Auditors ask “why did your agent approve this transaction?” You need logs showing the decision path.

- Debugging: When an agent fails, trace which integration call failed, what data it received, and why it made the wrong decision.

- Improvement: You can’t optimize what you don’t measure.

Integrate with Prometheus, ELK, Splunk, or Datadog for production monitoring. Track three categories of metrics.

Integration Health Metrics

Monitor these in real-time dashboards: API latency (p50, p95, p99), error rates by type, and timeout frequency. These align with OpenTelemetry semantic conventions for GenAI, which define standard metrics like gen_ai.client.operation.duration and gen_ai.client.token.usage.

Agent Performance Metrics

These require deliberate instrumentation design. Task completion rate needs a definition of “complete” per task type (e.g., “ticket resolved without escalation”). User satisfaction comes from thumbs up/down on responses, escalation rate, and support ticket correlation. Decision accuracy is the hardest to measure, see below.

How Do You Measure Decision Accuracy?

Ground truth is often available. The question is where to find it.

For RAG systems, use categorized query benchmarks with validation subsets. Test accuracy by query type (conceptual, procedural, error lookup, multi-hop) since each fails differently.

For approval workflows, compare agent decisions against eventual outcomes. Was the approved invoice paid? Was the flagged transaction actually fraudulent? The business process itself provides ground truth.

When ground truth is unavailable, sample decisions for human or AI-assisted review. The question is: how many?

Sample 300-400 decisions monthly. This achieves +/-5% margin of error at 95% confidence regardless of total volume (Cochran, 1977). The math: n = (1.96² x 0.5 x 0.5) / 0.05² = 385. For systems under 500 decisions/month, review all or accept wider uncertainty.

Business Impact Metrics

Calculate these monthly against pre-deployment baselines. These are not real-time dashboard metrics:

| Metric | Formula |

|---|---|

| Response time reduction | Agent-handled avg vs. pre-deployment baseline |

| Throughput increase | Tickets/hour after vs. before deployment |

| Cost savings | (Hours saved x labor cost) - (API costs + infrastructure) |

Table 2: Business impact calculation formulas

These metrics prove ROI and guide your next investments. For deeper coverage of observability infrastructure in AI workflows, see AI-augmented CI/CD pipelines.

What ROI Can Mid-Market Companies Actually Expect?

The numbers are compelling. Let me walk through real examples.

Bank of America’s Erica reduced IT service desk calls by 50% (Bank of America, 2025) across 213,000 employees. Insurance companies using agentic AI reduced claims processing time from 9.6 days to 3.2 days (Superhuman, 2026), a 67% reduction.

Atera’s 60% improvement in sales response times translates to faster deal closure. Armis’s 73% increase in RFP response capacity means the same team handles 73% more business. Both captured these gains without hiring.

BCG reports AI can reduce core insurance modernization costs by 30-50% (BCG, 2026). Agents pay for themselves, then fund the upgrades.

| Company | Pattern | Metric | Result |

|---|---|---|---|

| Atera | API Mediation | Sales Response Time | 60% reduction |

| Armis | API Mediation | RFP Response Capacity | 73% increase |

| Bank of America | API Mediation | IT Service Desk Calls | 50% reduction |

| Insurance Industry | Event-Driven | Claims Processing Time | 67% reduction (9.6 -> 3.2 days) |

Table 3: ROI examples across integration patterns (note: API Mediation dominates early wins due to faster implementation)

Why Do 40% of AI Agent Projects Still Fail?

Projects fail when teams skip fundamentals.

Unclear Business Value. Gartner predicts over 40% of agentic AI projects will be canceled by 2027 due to escalating costs, unclear value, and inadequate risk controls. Launching with vague goals like “improve productivity” makes it impossible to measure success. Define exact metrics before development: “Reduce invoice processing time from 8 days to 2 days while maintaining 99.5% accuracy” is measurable; “handle invoices better” is not.

Security Vulnerabilities. Prompt injection attacks are ranked #1 in OWASP 2025 Top 10 for LLMs (OWASP, 2025). Treat agents as privileged service accounts with these controls:

- Tool allowlisting (no arbitrary network/file access)

- Schema validation on tool inputs/outputs

- Output sanitization (no untrusted content forwarded)

- Secrets isolation (no secrets in prompts; short-lived tokens)

- Rate limiting + anomaly detection

- Approval gates for high-impact actions

- Audit logs (immutable, centralized)

A compromised agent can make thousands of requests per minute. For deeper coverage, see AI supply chain security risks.

Governance Retrofitting. Adding compliance controls after deployment requires painful redesigns. Plan audit trails, role-based access, and compliance testing from the start.

How to Start: A Practical Implementation Framework

Step 1: Set Specific Targets. Pick one workflow with high volume, predictable, with success metrics, and low regulatory risk. Good first candidates: customer support routing, RFP response compilation, IT service desk triage, or invoice processing.

Step 2: Audit Data Quality. Check for duplicates, format inconsistencies, missing values, and access permissions. Fix the top three issues. Aim for 80% clean data, not perfection.

Step 3: Choose Your Integration Pattern. API mediation for stable APIs and 1-2 agents (4-8 weeks). Event-driven for 1,000+ transactions/hour (8-12 weeks). MCP for 3+ agents or standardization priority (6-12 weeks).

Step 4: Build Observability from Day One. Track integration health (latency, error rates), agent performance (completion rate, accuracy), and business impact (your target KPI). Set alerts for error rates above 5%, latency p95 spikes, and approval override surges.

Step 5: Start with Single-Agent Workflows. Run your first agent in shadow mode for 2-4 weeks (longer for complex workflows). Compare agent decisions against human decisions. Exit criteria: Switch to production when accuracy exceeds 95% (adjust based on cost-of-failure analysis). Production gate: Error rate below 5% and manual override rate trending down. Expansion gate: KPI sustained for 4 weeks with no Sev-1 incidents.

Step 6: Fund Modernization with Agent ROI. Track which legacy systems create the most integration friction. If agents generate $200K annual savings, allocate 30-50% to infrastructure upgrades. This creates a self-funding cycle.

Why Should Your Board Fund This Now?

ROI before major infrastructure investment: your board sees results next quarter, not in three years.

Competitive advantage: sales teams respond 60% faster, RFP teams handle 73% more business, service desks handle 50% more calls. While competitors wait for budgets, you’re winning deals. Risk mitigation: agents layer over existing systems with no business disruption.

Explore how subagent architectures can orchestrate multiple AI agents without coordination complexity.

References

- AutoRFP, “RFP AI Agents: Revolutionizing How Companies Win More Deals in Less Time” (2026) — https://autorfp.ai/blog/rfp-ai-agents-revolutionizing-how-companies-win-more-deals-in-less-time

- Bank of America, “A Decade of AI Innovation: Erica Surpasses Milestones” (2025) — https://newsroom.bankofamerica.com/content/newsroom/press-releases/2025/08/a-decade-of-ai-innovation—bofa-s-virtual-assistant-erica-surpas.html

- BCG, “Agentic AI Power Core Insurance AI Modernization” (2026) — https://www.bcg.com/publications/2026/agentic-ai-power-core-insurance-ai-modernization

- Cochran, W.G., Sampling Techniques, 3rd ed. (1977) — https://en.wikipedia.org/wiki/Sample_size_determination

- Cyber Snowden, “Difference Between End of Life and Legacy Cyber Security” (2026) — https://cybersnowden.com/difference-between-end-of-life-and-legacy-cyber-security/

- Gartner, “Gartner Predicts Over 40% of Agentic AI Projects Will Be Canceled by End of 2027” (2025) — https://www.gartner.com/en/newsroom/press-releases/2025-06-25-gartner-predicts-over-40-percent-of-agentic-ai-projects-will-be-canceled-by-end-of-2027

- OpenTelemetry, “Semantic Conventions for Generative AI” (2024) — https://opentelemetry.io/docs/specs/semconv/gen-ai/

- OWASP, “LLM01:2025 Prompt Injection” (2025) — https://genai.owasp.org/llmrisk/llm01-prompt-injection/

- Superhuman, “AI Agent Useful Case Studies” (2026) — https://blog.superhuman.com/ai-agent-useful-case-studies/

- World Economic Forum, “AI Mid-Market Business Growth” (2026) — https://www.weforum.org/stories/2026/01/ai-mid-market-business-growth/